The implementation of semantic news aggregator with powerful search

the Purpose of this article is to share experiences and ideas of the project implementation based on full conversion of texts semantic representation and semantic (semantic) search on the resulting knowledge base. We will focus on the fundamentals of functioning system, the technologies used, and issues encountered in its implementation.

the Purpose of this article is to share experiences and ideas of the project implementation based on full conversion of texts semantic representation and semantic (semantic) search on the resulting knowledge base. We will focus on the fundamentals of functioning system, the technologies used, and issues encountered in its implementation.the

Why?

Ideally, a semantic system "understands" the content of the processed articles in the form of semantic concepts and highlights of their major ("what"). It gives a huge opportunity for more accurate clustering, automatic annotation and semantic search, when the system is looking for are not the words of the query and the meaning behind these words.

Semantic search is not only the answer to the meaning of typed in a search line the phrase, but in General the way the user interacts with the system. A semantic query can be not only a simple concept or phrase, but the document — the system produces semantically related documents. Profile of user's interests is also the semantic query and is able to operate in the "background" in parallel with other queries.

Response to the semantic query in the General case consists of the following components:

the

-

the

- Direct answer to the question and other information relating to the requested and related concepts.

the - the Semantic concepts of the semantically related concepts of the query, which may be a response to the question, and a means to "clarify" request.

the - Text documents, multimedia objects, links to sites on the topic that reveal and describe the requested semantic concept.

News aggregator – the most convenient news app for developing such a semantic approach. You can build a working system with a relatively small amount of the processed text and the highest allowable level of processing errors.

the

Ontology

When choosing an ontology, the main criterion was ease of use for building a semantic parser of the text and for the efficient organization of search. To simplify the system, the assumption was made that you can not handle, or handle with a large acceptable level of errors contained in the text information, which is assumed to be very important for the search task (supporting information).

In our ontology, simple semantic concepts (objects) can be divided into the following classes:

-

the

- Material objects, people, organizations, intangible objects (e.g., movies), geographic objects, etc. the

- Action figures ("sell", "inflation", "make"). the

- Features ("large", "blue"), let's call them attributes. the

- Periods of time, numeric information.

The basis of the information contained in the text is "nodes" formed meaningful combinations of concepts of the second class (action) and first class. Different kinds of objects and fill the empty valence (roles) (e.g., price – what product? where? from which seller?). We can say that the first class objects specify konkretisiert actions and indicators (price – oil). As a "utoobasaurus" object can be not only objects of actions, but also first class objects ("Russian"). This approach is similar to widely known in the Western computational linguistics frames (Framenet).

Nodes can include one another, when one node fills an empty role to another node. As a result, the text is converted to a system nested within other nodes.

Features applied to the semantic notions of first and second class, generally can be considered "secondary" information in relation to the search task. For example, in the expression "saved the low oil prices","stable oil supplies to Europe," the italicized attributes have less importance, the other objects. Such information is not included in the nodes, and is tied to him being bound to a particular place in the document. Similarly to nodes attached numerical information and time periods.

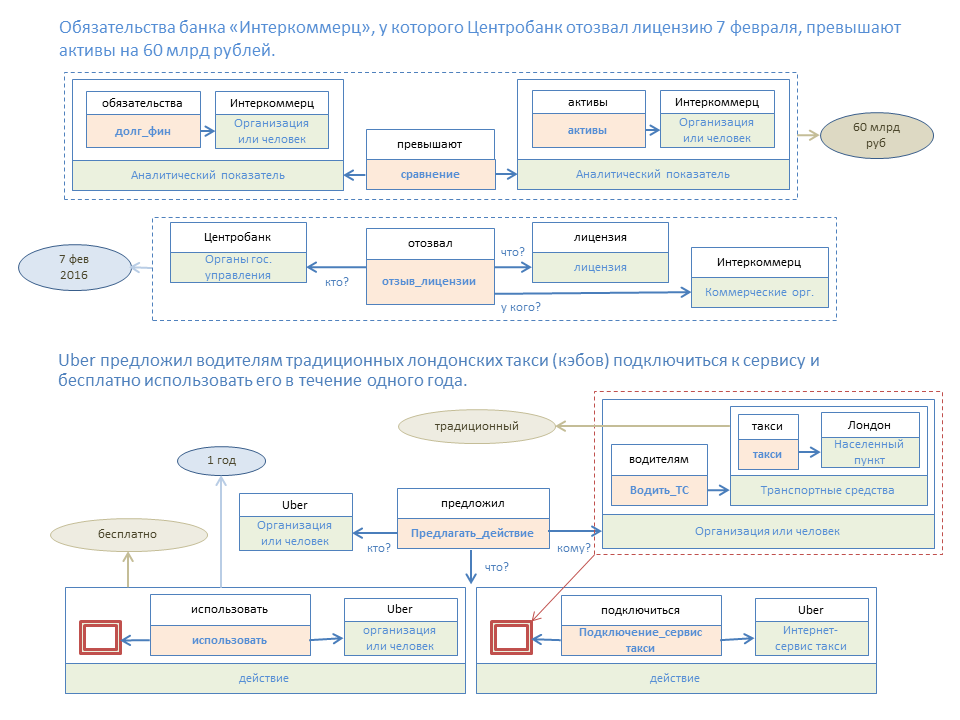

The figure below illustrates the semantic transformation of two simple phrases. The colored rectangles are the elements of the templates, nodes, and rectangles on them – elements of the node built with this template.

With this approach, we have two kinds of information:

the

-

the

- a Particular node exists ("oil prices"). Drive such nodes will be called "knowledge Base". the

- This node exists in certain areas of documents with specific attributes, numeric values and periods of time.

This separation we do to simplify and speed up searching information, when typically first look for the relevant query nodes, and then the resulting data can be filtered by the auxiliary parameters.

the

text to semantic representation

The main task of semantic text – to structure which contains objects in the form of a set of matching nodes. For this we use a template system nodes in which each element is subjected to the condition to a valid object type. Types form a tree graph. When the site template is set for a given role to a specific object type, then this role may be suitable all objects of the same type or "subordinate" types.

For example, under "commercial operation" active object (the seller or the buyer) may be an object of type "person or organization", as well as the underlying objects of all types (companies, shops, cultural institutions, etc.). In site templates start and syntactic constraints. Unlike most other systems of semantic analysis of texts, we do not do pre-parsing with the formation of a network of syntactic dependencies, and apply syntactic constraints in parallel with the semantic analysis.

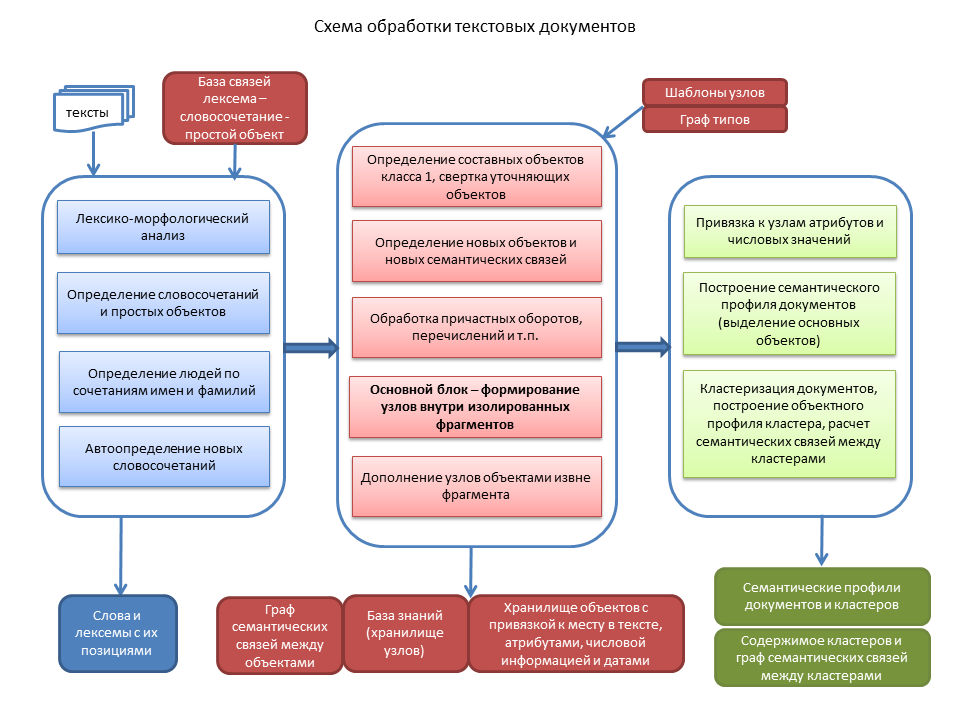

Briefly explain the main stages.

First the identification of simple features, which are defined by single words or known phrases. Next, you define combinations of names and surnames as guidance for people, and running the algorithm for the analysis of separate words and sequences of words that may be unknown to the system objects.

In the second stage generated by nodes based on the objects of class 1 of to lookup their objects. Phrases like "the General Director of the Moscow trading company "Horns and hoofs" are collapsed into a single object. Contained in these sites complement the information ("Moscow" as an indication of the location and "shopping" as a sign of the industry in this example) can be added to the count of semantic relations for the company. In the next Chapter, a graph of semantic connections take a closer look.

Then, the text needs to be structured in the form a sequence of independent fragments, each of which usually contains a certain phrase based on the verb, and ideally should fold in a single node, which may include other nodes. Processed participle and other constructions, and enumeration of objects of class 1, including the nodes, turn to special objects.

Then, for each fragment is the search for matching nodes based on the objects of class 2 of. If one utoobasaurus object formed multiple nodes are those that include the maximum number of objects in the fragment. Thus, based on the type of objects in the environment with a shift from semantically wide objects like the "to go" to the site, have a clear semantic meaning. If primary processing at the place of homonyms, there were several parallel objects, after this treatment, only those objects that entered the node (i.e., semantically consistent with the neighboring objects).

The last block conversion to semantic representation – the account objects that are in the text removed from usaopoly objects, but the meaning is implied. For example, "Moscow heat, the rain. Tomorrow colder and it will snow". Semantic analysis of the end of the sentence leaves vacant the role of geographical object, and a number of characteristics, you can determine what is "Moscow".

When the nodes are fully formed, they bind the attributes, numeric information and time periods. A typical situation where a time period is specified in only one place in the text, but refers to multiple nodes throughout the text. You have to use a special algorithm for allocation of periods across all nodes where the "missing" period of time based on their semantic meaning.

Finally, each document-defined basic objects (the"what" of this document). In addition to the number of occurrences, counted the participation of properties in nodes of different types.

Having rich semantic information, it is possible to build a fairly accurate measure of the semantic proximity of the documents. The consolidation of documents in the cluster do when you exceed the measure of semantic proximity a certain threshold. The generated semantic profiles of the clusters (main cluster objects, it is usually search) and the network of semantic connections between clusters, which allows to display "cloud" of documents that are associated in meaning with a specific document.

How does semantic search

Algorithm semantic search consists of the following main blocks:

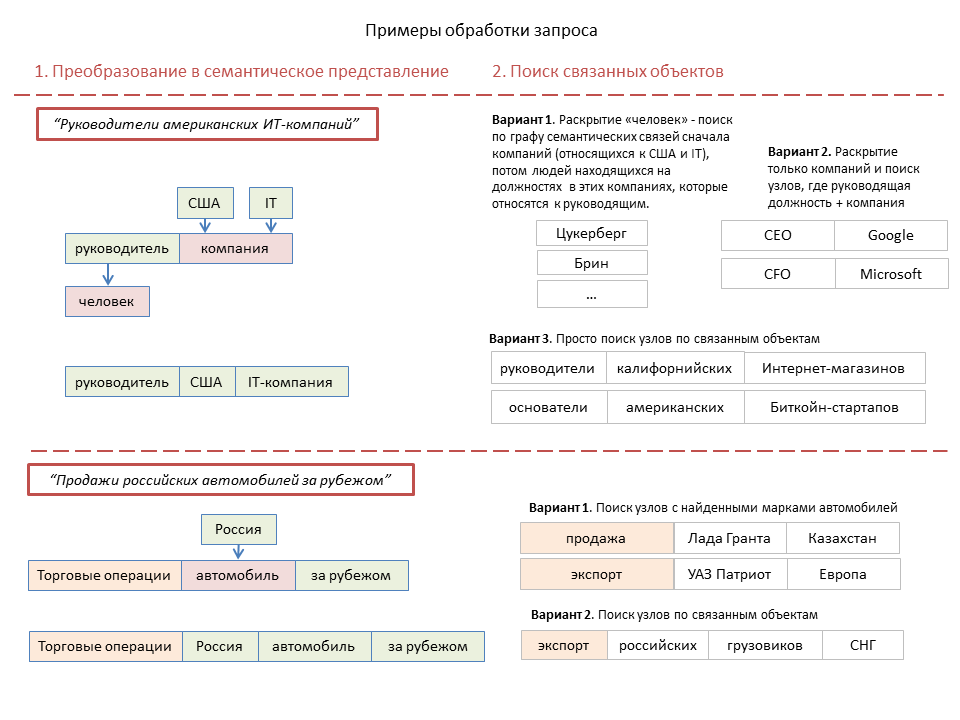

First, if a text query, then you need to convert it to a semantic representation. Differences from the above-described algorithm of text processing documents are dictated primarily by the need for very fast execution of the search query. Therefore, no nodes are generated and allocated one or more blocks consisting of potentially utoobasaurus object and a set of objects based on their type and location in the query, I can relate to this utoobasaurus.

This may be formed by several parallel combinations, one of which in the next step you need to uncover through the knowledge base combinations such as "Moscow" in the list of specific objects, and the other is not necessary.

The next step – search for semantically related objects and components. For a single object class 1 is the selection of semantically related objects. In the case of combinations of "action + object" searching for nodes that have the same or a sub type utoobasaurus object, and having in its composition of objects, matching or semantically associated with the query objects. Also, made the disclosure in a list of specific objects, combinations of the type "the Moscow company" or "Europe".

Here we use a tree graph of semantic relationships between objects. The construction concept is simple — to a particular object snap those "subordinate" objects, which should be considered in the search on this object. For example, cities are subordinate to States, political leaders are also subject to States, companies are subject to countries or cities, heads of companies subject to the companies. For material objects, this graph is built from more General concepts to private and partly coincides with the graph types.

For a number of objects the number of "subordinates" can be very large and there is a need to select the most important. For this purpose between the graph elements, set the numerical factor semantic relations, which is calculated on the basis of importance of the objects. For different types of objects, the significance is determined differently, for example, for companies – on the basis of economic indicators (turnover) or the number of employees, the geographic features – the number of the population.

Further, simple features and components from the output of the previous stage, looking in the object cluster profile. If found little clusters, searching in the object profile of documents.

If the search query contains objects attributes (characteristics), there is additional filtering of the documents found in the existing linked to the found nodes of the desired attributes. If the request is token, for which there were no transition to semantic objects, semantic search is supplemented by a General text search on the tokens.

Finally, Rangiroa the found clusters and the documents generated snippets, and other elements of issuance (references to related objects, etc.). Ranking is usually according to the degree of semantic relationship between query subjects and objects through which documents are found. Also, the ranking can be considered a semantic profile of user's interests.

Before performing a complex query, you need to analyze the complexity of processing different components, and build implementation so that in the process of treatment there were fewer intermediate objects or documents. Therefore, the processing order may not always correspond to that described above. Sometimes it may be advantageous to first find the documents on the basis of the query, and then contained objects filtered in relation to the rest of the query.

A separate algorithm is required for "broad" query "economy", "politics", "Russia", etc., which are characterized by a very large number of related objects and relevant documents.

For example, the object "policy" related:

the

-

the

- People-politicians – holding high public office, or authoritative experts the

- Organizations — political parties, bodies of state power. the

- a Series of events and actions (elections, appointments to certain positions, activities of the state Duma, etc.).

In this case, the search lead to a relatively small number of topical clusters with a high degree of importance, and Rangiroa them by the number of fresh documents in the cluster.

the

the Main problems of implementing this approach and their solutions

the Problem 1. The system must "know" all the objects which are found in the texts.

Possible solutions include the following:

the

-

the

- Application of semantic systems in areas where the ignorance or errors of identification of rare and little-known objects is not critical.

the - Fix objects from existing databases of structured information (DBpedia, Rosstat, etc.)

the - the Use of simple algorithms for the automatic identification of the type of object on clarifying words (e.g., "film "the Martian""), automated identification of persons, as well as phrases that may be unknown to the system objects. With a low probability of error objects in the database start automatically, in cases of high probability of errors use a system of manual checks.

the - To identify objects considering the possibility to use machine learning, training the system on a sample of already known objects, and based on the semantic type of objects surrounding an unknown object.

the Problem 2. How to create templates for all possible semantic nodes.

In solving similar problems of the distribution of objects according to semantic roles English systems, SRL (Semantic Role Labeling) used a machine learning algorithm using the already annotated corpus. As a system of structures "action + role" is used, for example Framenet. However, for the Russian language there is no suitable spaced of the housing. In addition, the implementation of this approach has its problems, discussion of which is beyond the scope of this short article.

In our approach, as described above, the distribution of objects to roles is based on matching the types of objects to the semantic restrictions required for the roles in the template nodes. The system is now about 1700 templates, most of which was generated semi-automatically on the basis of Framenet frames. However, the semantic constraints for roles have mostly set manually, at least for the most frequent nodes.

You can try the automatic generation of nodes using machine learning on the basis already generated. If there is a certain combination of objects and words (unknown system) with certain syntactic characteristics, it is possible to form knots similar to the existing ones. Although these nodes will still need to manually do the template, the presence of such a node would still be better than none.

the Problem 3. The high computational complexity of many semantic queries.

Some requests may involve processing a very large number of intermediate objects and nodes, and to run slowly. This problem is quite solved technical methods.

the

-

the

- Required parallel query execution. the

- Analysis of complexity, different execution paths of a query and selection of the most optimal. the

- Using the numerical coefficients in the graph of semantic relationships allows you to limit the number of objects that are involved in intermediate stages of query processing.

the

recommended reading

the

-

the

- a series of articles on Habre technology ABBYY Compreno. the

- a Good overview the book: "Semantic Role Labeling", Martha Palmer, Daniel Gildea, and Nianwen Xue, 2010. the

- Dipanjan Das, Desai Chen, André F. T. Martins, Nathan Schneider, Noah A. Smith (2014) Frame-Semantic Parsing.

Комментарии

Отправить комментарий