Options for building highly available systems on AWS. Overcoming disruptions. Part 1

Even such monsters of the cloud industry like Amazon problems occur with the equipment. In connection with the recent disruptions in the US East-1 data center, this article might be helpful.

Options for building highly available systems on AWS. Overcoming outages

Fault tolerance is one of the main characteristics of all cloud systems. Every day many applications are designed and deployed on AWS without taking into account this characteristic. The reasons for this behavior can vary from technical lack of knowledge in how to design a fault tolerant system up to the high cost of creating a full-fledged high-availability systems within AWS. This article highlights several solutions that will help to overcome the disruptions of hardware providers and create a more suitable solution within AWS infrastructure.

The structure of a typical web application consists of the following levels: DNS, Load Balancer, web server, application server, database, cache. Let's take this stack and consider in detail the main points you need to consider when building highly available systems:

the

Part 2

High availability at level a web server / application server

In order to exclude a component having a single point of failure (SPOF — Single Point of Failure), it is common practice to run web applications on two or more instances of EC2 virtual servers. This solution allows to provide higher fault tolerance compared to using one server. Application server and web servers can be configured using the health check, and without it. Shown below are the most common architectural decisions for highly available systems using inspection:

The key points that you need to pay attention when building this system:

the

Article based on information from habrahabr.ru

Options for building highly available systems on AWS. Overcoming outages

Fault tolerance is one of the main characteristics of all cloud systems. Every day many applications are designed and deployed on AWS without taking into account this characteristic. The reasons for this behavior can vary from technical lack of knowledge in how to design a fault tolerant system up to the high cost of creating a full-fledged high-availability systems within AWS. This article highlights several solutions that will help to overcome the disruptions of hardware providers and create a more suitable solution within AWS infrastructure.

The structure of a typical web application consists of the following levels: DNS, Load Balancer, web server, application server, database, cache. Let's take this stack and consider in detail the main points you need to consider when building highly available systems:

the

-

the

- Building highly available systems on AWS the

- High availability at level a web server / application server the

- High availability load balancing / DNS the

- High availability at the database level the

- Building highly available systems across availability zones AWS the

- create a highly available system between regions of AWS the

- Building highly available systems across cloud and hosting providers

Part 2

High availability at level a web server / application server

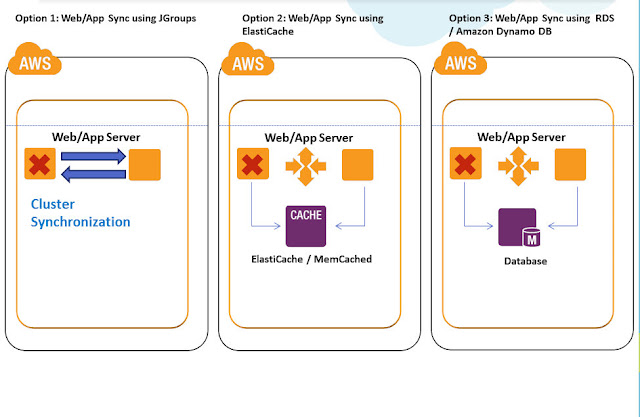

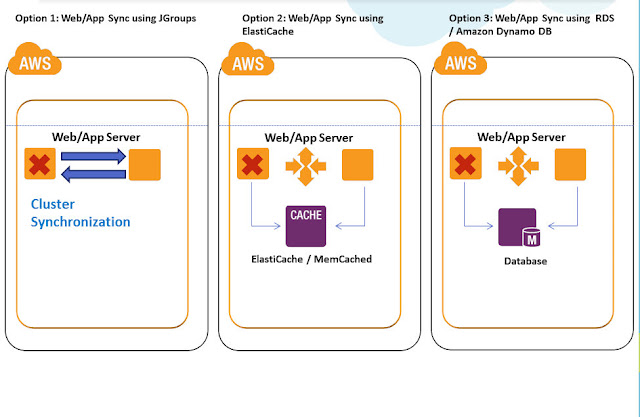

In order to exclude a component having a single point of failure (SPOF — Single Point of Failure), it is common practice to run web applications on two or more instances of EC2 virtual servers. This solution allows to provide higher fault tolerance compared to using one server. Application server and web servers can be configured using the health check, and without it. Shown below are the most common architectural decisions for highly available systems using inspection:

The key points that you need to pay attention when building this system:

the

-

the

- because the current AWS does not support Multicast Protocol at the application level, data must be synchronized using conventional Unicast TCP. For example, for Java applications you can use JGroups, Terracotta NAM or similar software to synchronize data between servers. In the simplest case, you can use one-way synchronization with rsync, more versatile and reliable solution is the use of network distributed file systems such as GlusterFS. the

- To store user data and information about sessions you can use Memcached EC2, ElastiCache or Amazon DynamoDB. For greater reliability, you can deploy ElastiCache cluster in different availability zones AWS. the

- Using ElasticIP to switch between servers, it is not recommended for highly critical systems, as this process can take up to two minutes. the

- User data and sessions can be stored in the database. To use this mechanism should be cautious, it is necessary to evaluate possible delays when read/write to the database. the

- users can upload files and documents should be stored on network file systems such as NFS, Gluster Storage Pool or Amazon S3. the

- Needs to be included the policy of fixing sessions at the level of Amazon ELB or reverse proxies if session is not synchronized through a single repository, database, or other similar mechanism. This approach provides high availability, but does not provide fault tolerance at the application level.

- Multiple Nginx or HAproxy can be configured for high availability in AWS, these services can determine the service availability and distribute the requests among the available servers the

- Horizontal scaling of load balancers for vertical scaling. Horizontal scaling increases the number of individual machines performing the function of balancing, eliminating a single point of failure. For scaling of load balancers like Nginx and HAproxy need to develop their scripts and system images, used to scale Amazon AutoScaling is not recommended in this case. the

- To determine the availability of the server load balancer you can use Amazon CloudWatch monitoring or third party monitoring services such as Nagios, Zabbix, Icinga and in case of unavailability of one of the servers using scripts and command-line tools to manage EC2 to launch a new server instance to a load balancer within a few minutes.

- Building highly available systems across availability zones AWS the

- create a highly available system between regions of AWS the

- Building highly available systems across cloud and hosting providers

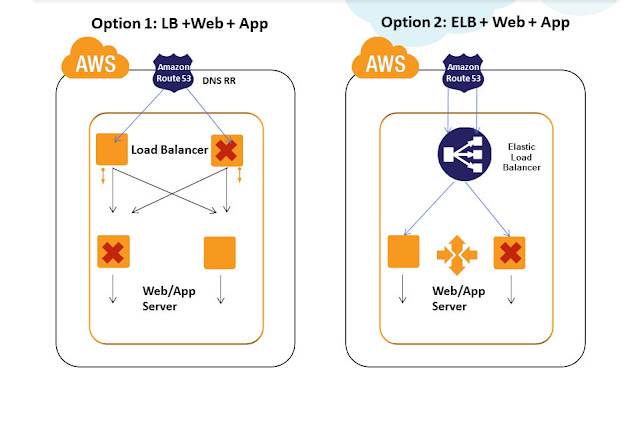

High availability load balancing / DNS

The level of DNS/Load Balancing is the main entry point for the web application. There is no sense in building complex clusters of severe replicated web farms in the application level and the database without building a highly available system at the level of DNS/LB. If the load balancer is a single point of failure, then its failure will disable the entire system. Below are the most common solutions for high availability at the level of the load balancer:

1) Use Amazon Elastic Load Balancer as a load balancer for high availability. Amazon ELB automatically distributes the application load across multiple EC2 servers. This service gives you the opportunity to achieve more than the ordinary fault tolerance, it provides a smooth increase in resources between which the distributed load depending on the intensity of the incoming traffic. This allows us to provide service to several thousand simultaneous connections and thus can flexibly expand, with increasing load. ELB is inherently fault-tolerant unit which can fix the faults in their work. When the load increases, at the level of the ELB automatically added to the additional virtual machine ELB EC2. This automatically eliminates single point of failure and all the load balancing mechanism continues to work even if some virtual machines ELB EC2 fail. As Amazon ELB automatically detects the availability of services, between which it is necessary to distribute load and in case of problems automatically routes requests to available servers. Amazon ELB can be configured as load-balancing using random allocation Round Robin, without checking the status of services and using the mechanism of fastening of sessions and checking the status. If synchronization of sessions are not implemented, even using fixing sessions may not provide the lack of occurrence of application errors when one of the servers and redirect users to an available server.

2) Sometimes applications require:

— Complex load-balancing with possibility of caching (Varnish)

— The use of algorithms for load distribution:

— A minimum of connections (least connection) – servers with fewer active connections get more requests

— A minimum of connections with weights (Weighted Least-Connections) -servers with fewer active connections and greater capacity get more requests.

— The distribution of the source (Destination Hash Scheduling)

— Distribution by recipient (Source Hash Scheduling)

— Distribution based on placement and minimum connections (Locality-Based Least-Connection Scheduling) — More requests are to servers with fewer active connections by IP addresses

— To provide for large short-term spikes

— The fixed IP address of the load balancer

In all of these cases, the use Amazom ELB is not suitable. It is better to use a third-party load balancers or reverse proxies such as Nginx, Zeus, HAproxy, Varnish. But you need to ensure that no single point of failure, the simplest solution to this problem is to use multiple load balancers. Reverse proxies Zeus already has built-in functionality for working in the cluster for other services it is necessary to use round-Robin distribution Round Robin DNS. Let's look at this mechanism in more detail, but first let's define a few key points that you should consider when building a reliable distribution system load from AWS:

the

-

the

Now let us discuss the level that is above the balancer – DNS. Amazon Route 53 is a highly available, reliable and scalable DNS service. This service can effectively allocate user queries to all Amazon services such as EC2, S3, ELB and out of AWS infrastructure. Route 53 is essentially alcazarquivir managed DNS server can be configured both via CLI and via the web console. The service supports both cyclical and the weight distribution of the load and can distribute requests between the separate EC2 servers within the load balancer, and Amazon ELB. When using a cyclic distribution, the verification of availability of service and change requests to available servers does not work and must be submitted on the application level.

High availability at the database level

Data is the most valuable part of any application and designing high availability at the database level is the most priority in any highly available system. To exclude single point of failure at the database level, it is common practice to use multiple database servers with data replication between them. This can be as the use, of cluster and the scheme Master-Slave. Let's look at the most popular solutions to this problem from AWS:

1) Use replication Master-Slave.

We can use one EC2 server as the primary (master) and one or more secondary servers (slave). If these servers are located in a public cloud, you need to use ElastcIP, if you use private cloud (VPC) access between servers can be done through private IP addresses. In this mode, the server basay data can use asynchronous replication. When the primary database server fails, we can switch the secondary server to the Master mode using the scripts, so ensuring high availability. We can force replication between servers in the Active-to-Active or Active-to-Passive. In the first case, a write operation, the operation is an intermediate writing and reading must be performed on the primary server, while read operations must be performed on the secondary server. In the second case, all reads and writes must be performed only on the primary server, and secondary server only in case of unavailability of the primary server after the secondary server switches to the Master mode. It is recommended to use EBS images for EC2 database servers to ensure reliability and stability at the level of the disk. To provide additional performance and data integrity, you can configure the EC2 server databases with various options of RAID arrays from AWS.

2) MySQL NDBCluster

We can adjust two or more MySQL EC2 servers as SQLD nodes and data, for storing data and one controlling the MySQL server to create the cluster. All data nodes in the cluster can use asynchronous replication to synchronize data among themselves. Reads and writes can be simultaneously distributed among all storage nodes. When one of the storage nodes in the cluster fails, the other becomes active and handles all incoming requests. If you are using a public cloud that is ElasticIP addresses for each server in the cluster, if you use private cloud, you can use internal IP addresses. It is recommended to use EBS images for EC2 database servers to ensure reliability and stability at the level of the disk. To provide additional performance and data integrity, you can configure the EC2 server databases with various options of RAID arrays from AWS.

3) Use availability zones in conjunction with RDS

If we use Amazon RDS MySQL for the database level, we can create one Master server in one availability zone and one Hot Standby server in another availability zone. Additionally we can have multiple secondary Read Replica servers in multiple availability zones. The primary and secondary nodes RDS can use synchronous replication of data between a Read Replica, the server uses asynchronous replication. When the Master RDS servers is unavailable, the Hot Standby automatically becomes available at the same address within a few minutes. All write operations, intermediate reading and writing must be performed on the Master server and read operations can be performed on Read Replica servers. All RDS services use EBS images. The RDS provides automated backups and it can restore data from a certain point, the same RDS can work within a private cloud.

The remaining items will be considered in the second part:

the

-

the

Original article: harish11g.blogspot.in/2012/06/aws-high-availability-outage.html

Author: Harish Ganesan

Комментарии

Отправить комментарий