Unkillable Postgresql cluster inside the Kubernetes cluster

If you ever wondered about trust and hope, it is likely, had this to anything as much as database management systems. Well, really, it's a Database! The title contains the whole point — the place where data is stored, the main task to STORE. And the saddest thing, as always, once these beliefs are divided about the remains of this one who died for database 'prod'.

what to do? — you will ask. Not to deplot on the server, nothing, — we answer. Anything that is not able itself to repair, at least temporarily, but securely, and quickly!

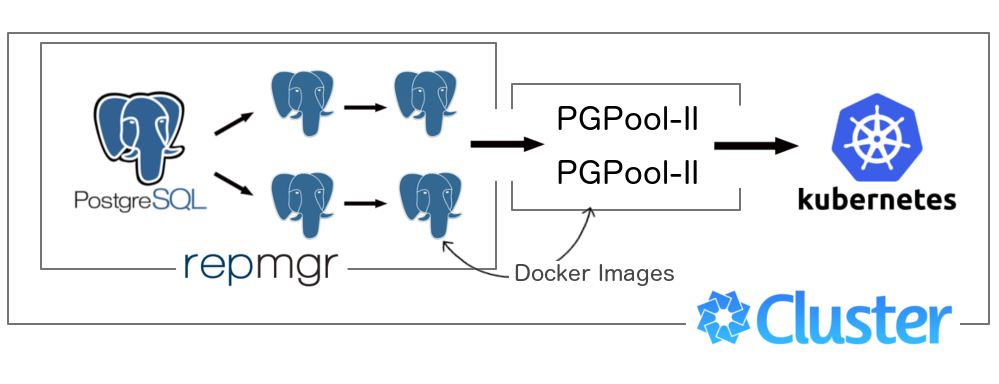

In this article I will try to tell about your experience settings almost immortal Postgresql cluster within the other failover solutions, but Google's Kubernetes (k8s aka)

the

Contents

the Article was published a little more than expected, but because the code in many links, mostly on code, in which there is a speech.

Additionally, I attach a table of contents, including impatient to go directly to the results:

the

-

the

- Task the

- Solution method "Gugleniya"

the

the - Consolidate and build solutions

the

the - Result the

- Documentation and material used

the

Task

the Need to have a repository with the data for almost any application need. And to have this vault is resistant to the adversities in a network or on physical servers — good tone of a competent architect. Another aspect is the high availability of the service even with large competing requests for service that means easy scaling if necessary.

we will have problems to solve:

the

-

the

- Physically distributed service the

- Balancing the

- Not limited to zoom by adding new nodes the

- Automatic recovery when it crashes, destruction and loss of communication nodes the

- No single point of failure

Additional paragraphs, due to the specific religious beliefs of the author:

the

-

the

- Postgres (the most academic and consistent solution for RDBMS free available) the

- Docker packaging the

- Kubernetes infrastructure

On the diagram it will look like this:

the

master (node1 primary) --\

|- slave1 (node2) ---\ / balancer \

| |- slave2 (node3) ----|---| |----client

|- slave3 (node4) ---/ \ balancer /

|- slave4 (node5) --/given the input

the

-

the

- a Greater number of read requests (to record) the

- Linear increase in load with peaks up to x2 from medium

the

Solution method "Gugleniya"

Being experienced in solving IT problems, a human being, I decided to ask the collective mind: "postgres cluster kubernetes" is a bunch of rubbish, "postgres docker cluster" is a bunch of rubbish, "postgres cluster" — some variants of which had woat.

What upset me is the lack of sane Docker builds and description of any option for clustering. Not to mention Kubernetes. By the way, the Mysql options were not many, but it was. At least like an example in the official repository k8s Galera(Mysql cluster)

Google has made it clear that the problem will solve itself and in the manual mode..."but even through disparate tips and articles" — I exhaled.

the

and nepreemlemo Bad decisions

will Immediately notice that all of the items in this section can be subjective and it is even viable. However, relying on their experience and instinct, I had to cut off.

When someone makes a universal solution (for anything), I always think that such things are cumbersome, unwieldy and hard to maintain. The same happened with Pgpool, which is able almost everything:

the

-

the

- Balancing the

- Storage packs connections for optimizing connections and speed of access to the database the

- Support replication options (stream, slony) the

- Auto-detect Primary server to record, which is important for the reorganization of the roles on the cluster the

- Support failover/failback the

- Self-replication master-master the

- Coordinated work of multiple nodes Pgpool-s to eliminate single points of failure.

the First four items I have found useful, and stopped at that, realizing and considering the problems of the other.

the

-

the

- Restore using Pgpool2 does not offer any system of decision-making about the next master — all logic must be described in the commands failover/failback the

- recording Time, replication master-master, reduced to twice compared to the option without it, regardless of the number of nodes... well, at least not growing linearly the

- How to build a cascading cluster (when one reads the slave with the previous slave) — do not understand the

- of Course, well, that Pgpool knows about his brothers and quickly can become an active link in case of problems on the adjacent site, but the problem for me, solves Kubernetes, which guarantees the same behavior, in General, any service installed in it.

Actually, also reading and comparing found with the familiar and work out of the box streaming replication (Streaming Replication), it was easy for me not to think about Elephants.

Yes, and everything else on the first page of the project website, the guys write that with postgres 9.0+ you don't need Slony in the absence of some specific system requirements:

the

-

the

- partial replication the

- integration with other solutions ("Londiste and Bucardo") the

- additional behavior when replicating

Actually, I think Slony is not a cake... at least if you don't have these three specific tasks.

Look around and understand the options approach the ideal two-way replication, it was found that victims are not compatible with life certain applications. Not to mention the speed, there are limitations in the work with transactions, complex queries (SELECT FOR UPDATE).

It is likely, I'm not so tempted in this matter, but what he saw was enough for me to abandon that idea. And scattered brains, it seemed to me that for a system with enhanced write operation need completely different technology and not relational database.

the

to Consolidate and build the solution

In the examples I will speak about how fundamentally should look like the solution, and in the code — as it happened with me. To create a cluster do not necessarily have a Kubernetes (there is an example docker-compose) or Docker in principle. Just then, all the steps will be useful, not as a solution of the type CPM (Copy-Paste-Modify), but as the installation guide with snippets.

the

Primary and Standby is Master and Slave

Why colleagues from Postgresql abandoned the terms "Master" and "Slave"?.. hmm, I could be wrong, but there was a rumor that because of the non-polit-correctness, they say that slavery is bad. Well, right.

the First thing to do is to enable Primary server, followed by the first layer of the Standby, and the second — all according to the task. Hence we obtain a simple procedure to enable a normal Postgresql server mode Primary/Standby the configuration to enable Streaming Replication

wal_level = hot_standby

max_wal_senders = 5

wal_keep_segments = 5001

hot_standby = onAll the options in the review have a brief description, but in a nutshell, this configuration does the server understand that he is now part of the cluster and, if anything, should be allowed to read WAL logs other customers. Plus resolve queries during recovery. A great detailed description on setting this kind of replication can be found on the Postgresql Wiki.

once we got the first server in the cluster can turn on Stanby, who knows where his Primary.

My goal here has been to assemble a universal image Docker Image, which is included in the work, depending on the mode, like so:

the

-

the

- Primary:

the-

the

- Configure Repmgr (about it later) the

- Creates the database and user for the application the

- Creates the database and user for monitoring and replication support the

- Updates to config(

postgresql.conf) and opens access to users externally(pg_hba.conf)

the - Starts the Postgresql service in the background the

- Registered as a Master in Repmgr the

- Runs

repmgrd— the demon from Repmgr for replication monitoring (too late)

the - For Standby:

theClones a Primary server with Repmgr (among other things with all the configs, so just copy

$PGDATAdirectory)

the - Configure Repmgr the

- Starts the Postgresql service in the background after cloning service sanity realizes that he is standby and obediently follow Primary the

- is Logged as a Slave in Repmgr the

- Runs

repmgrd

For all these operations, the sequence is important, and therefore code crammed sleep. I know — not well, but it is convenient to configure the delay using ENV variables when you need time to start all containers (e.g. via docker-compose up )

All variables this way is described in docker-compose file.

the difference of the first and second layer Standby services that for the second master is any service from the first layer, not the Primary. Do not forget that the second tier should start after the first delay.

the

Split-brain and the election of a new leader in the cluster

Split brain — a situation in which different segments of the cluster can create/to elect a new Master-a and to think that the problem is solved.

This is one, but not the only problem that I helped solve Repmgr.

In fact it is the Manager who knows how to do the following:

the

-

the

- Clone Master (master — in terms of Repmgr) and automatically nastiti nevrogenny Slave the

- to Help to restart the cluster dying Master. the

- In automatic or manual mode Repmgr can to elect a new Master and and reconfigure all Slave services to follow a new leader. the

- Output from the cluster nodes the

- Monitor health of the cluster the

- Run command events inside a cluster

In our case, comes to the aid of repmgrd which starts the main process in the container and monitors the integrity of the cluster. The situation, when lost access to the Master server, Repmgr tries to analyze the current structure of the cluster and make the decision about who will be the next Master. Naturally Repmgr smart enough not to create a Split Brain situation and to choose the correct Master.

the

Pgpool-II — swiming pool of connections

the Last part of the system — Pgpool. As I wrote in the section about the bad decisions, the service still does the job:

the

-

the

- Balance the load among all cluster UZD the

- Granit the connection handles to optimize access speed to the database the

- In our case, Streaming Replication — Master automatically finds and uses it for write requests.

As a outcome, I turned fairly easy Docker Image, which at the start configures itself to work with a set of hosts and users who will have the possibility to pass md5 authorization through Pgpool (this, too, as it turned out, not everything is simple)

Very often there is a task to get rid of single points of failure, and in our case, this point is the pgpool service that proxies all requests and can become the weakest link in the way of access to the data.

Fortunately, in this case our problem is solved k8s and allows you to make as many replications of service you need.

for example, Kubernetes unfortunately not, but if you are familiar with the how does Replication Controller and/or Deployment, then pull off the above you will not be difficult.

the

Result

This is not a retelling of the scripts to solve the problem, but the description of the structure of the solution of this problem. Which means for a deeper understanding and optimization decisions will have to read code to at least README.md at github, which is meticulously step by step and tells how to start a cluster and docker-compose and Kubernetes. Everything else, for those who recognize and are resolved to move on, I'm ready to stretch virtual helping hand.

the

-

the

- Source code and documentation on GitHub the

- Docker Image for cluster-ready Postgresql example the

- Docker Image for Pgpool c configuration via ENV variables

the

Documentation and material used

the

-

the

- Streaming replication in postgres the

- Repmgr the

- Pgpool2 the

- Kubernetes

the

PS:

I Hope that the article will be useful and will give a little bit positive before the start of summer! Good luck and good mood, colleagues ;)

Комментарии

Отправить комментарий